Mac Studio

Enterprise AI in Action

Ready to Deploy AI That Actually Works?

4 Real-world Challenges

1 Powerful Solution

Challenge 1|When Models Are Too Large for Memory

Traditional GPU memory limitations force you to fragment large language models, sacrificing performance and wasting development time.

Mac Studio shatters these limits with up to 512GB unified memory—a shared pool accessible to every processing unit. Deploy 130B+ models intact. Experience seamless inference powered by MLX optimization. One system. Full model. Zero compromises.

Challenge 2|When Budget Is Limited but AI Cluster Is Needed

Enterprise AI infrastructure comes with enterprise price tags. Traditional GPU clusters can drain budgets before delivering value, forcing tough choices between scale and affordability.

Research from a National Taiwan University team points to a different path: in their experiments, Mac Studio clusters delivered 22x better cost-performance than conventional GPU servers for MoE workloads. Same capabilities, a fraction of the investment. Build your AI infrastructure without breaking the bank.

Challenge 3|When AI Needs to Leave the Server Room

AI shouldn’t be chained to server racks. Real-world applications demand mobility—on film sets, in field research, at remote facilities. Traditional solutions are too power-hungry, too loud, too stationary.

Mac Studio redefines deployment flexibility: 150-200W power draw, 3.6kg compact design. Whisper-quiet operation. Deploy anywhere—from corporate offices to production locations. Take powerful AI wherever innovation happens.

Challenge 4|When Open Source Becomes the Mainstream Choice

The enterprise AI landscape has shifted decisively: a 94% AI adoption rate, with 89% of enterprises choosing open-source. Companies leveraging open-source AI report 25% higher ROI while dramatically reducing total cost of ownership. Vendor lock-in is yesterday’s problem.

Mac Studio embraces this future with native support for open-source ecosystems and advanced MoE architectures. Your choice. Your models. Your competitive advantage.

Mac Studio Storage Configuration

Internal Storage

Ultra-fast internal storage serves as your hot data zone—where active models, training checkpoints, and live projects reside for instant access.

- Millisecond-level OS and application loading

- Active dataset samples and model checkpoints

- Real-time project materials for video production

- Zero-bottleneck data supply for GPU/CPU

Internal NVMe

16TB

Beyond Internal Storage Limits

Modular, high-capacity external storage systems that transform Mac Studio from desktop workstation to enterprise AI platform

Mac Studio’s built-in 16TB NVMe excels at handling hot data—but what happens when your AI datasets grow to hundreds of terabytes, or when teams need shared access to massive model repositories?

Accusys offers two external storage architectures:

Personal Expansion or Team Sharing

Choose the solution that fits your workflow—without sacrificing speed, reliability, or the elegant simplicity Apple users expect.

Combining Mac Studio with Accusys storage arrays

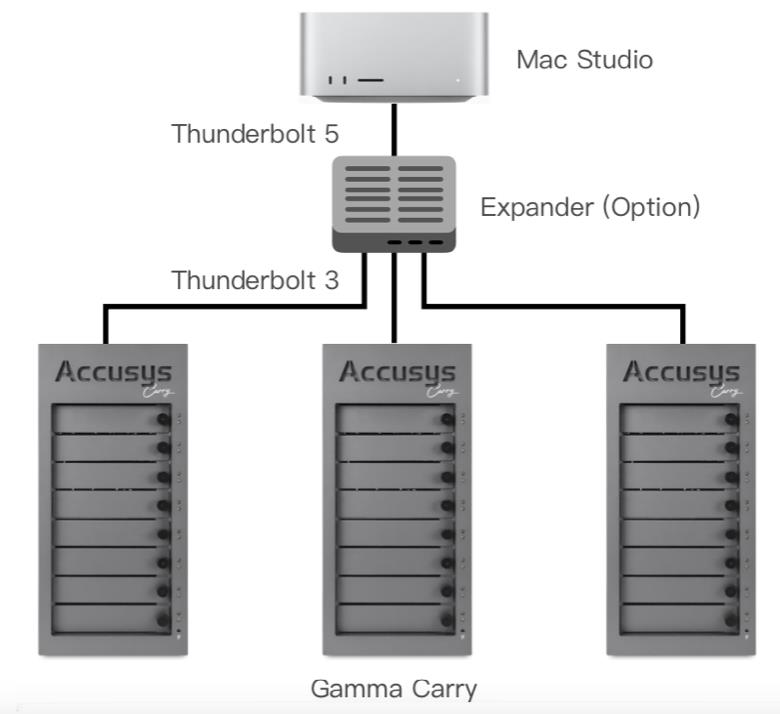

【Option 1】

Gamma Carry 12

Personal Workstation High-Capacity Expansion

A massive-capacity storage solution that connects directly to Mac Studio, designed for individual users. Perfect for AI developers and content creators who need extensive local storage.

● 360TB raw capacity per unit

● Hardware RAID 5/6 fault tolerance

● Thunderbolt 5 direct connection

● Expandable to 1PB+ with multiple units

● macOS RAID 0 aggregation for maximum performance
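
As a rough sizing sketch for the figures above, the following Python estimates usable capacity per unit under RAID 5 and RAID 6, and how many units a target capacity would need. It assumes 12 equal drives per unit (inferred from the product name, not stated in the spec list) and ignores filesystem overhead. The function names are illustrative only.

```python
import math

RAW_TB_PER_UNIT = 360   # from the spec list above
DRIVES_PER_UNIT = 12    # assumption: inferred from the "Carry 12" name


def usable_tb(raid_level: int, raw_tb: float = RAW_TB_PER_UNIT,
              drives: int = DRIVES_PER_UNIT) -> float:
    """Usable capacity of one unit: RAID 5 reserves one drive for parity, RAID 6 two."""
    parity_drives = {5: 1, 6: 2}[raid_level]
    per_drive_tb = raw_tb / drives
    return per_drive_tb * (drives - parity_drives)


def units_needed(target_tb: float, raid_level: int) -> int:
    """Smallest number of units whose combined usable capacity reaches the target."""
    return math.ceil(target_tb / usable_tb(raid_level))


print(usable_tb(5))           # 330.0
print(usable_tb(6))           # 300.0
print(units_needed(1000, 6))  # 4
```

Under these assumptions, three units exceed 1PB of raw capacity, while a fourth is needed to reach 1PB usable once parity is accounted for.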

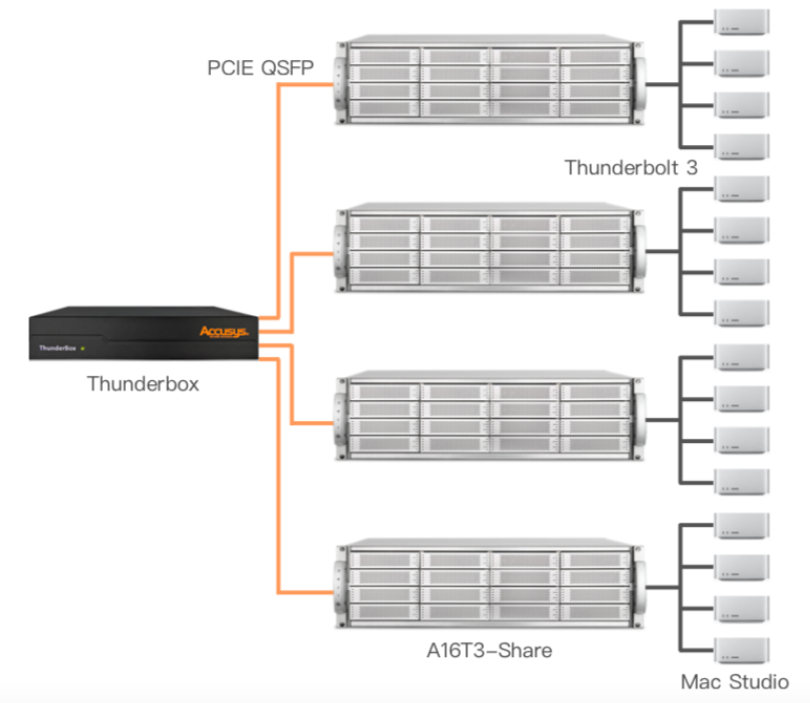

【Option 2】

A16T3-Share

Team Collaboration SAN Architecture

Shared storage solution designed for multi-user collaboration. Enable entire teams to access the same data simultaneously over the network.

● Supports network access for up to 16 workstations

● Compatible with Apple Xsan cluster file system

● Conflict-free concurrent read/write operations

● Metadata control ensures data integrity

● Single source of truth for teams

Use Cases

Media & Image AI Production

Run Stable Diffusion, speech-to-text, and image enhancement locally with unified memory for full model loading. Accusys Gamma Carry 12 delivers multi-GB/s striped throughput supporting simultaneous 4K/8K video inference and editing workflows.

Enterprise Private LLM Services

Finance, healthcare, and public sectors deploy models like Falcon, DeepSeek R1, or Qwen MoE on-premises for data sovereignty and compliance. Accusys shared file systems enable cluster collaboration without version conflicts.

Edge Computing & Field Inference

Deploy Mac Studio with Accusys portable storage at manufacturing sites, media locations, or drone operations for instant image recognition and content generation—no cloud required. Multi-site backup enables hybrid cloud architectures.

Research & Education Platforms

Test MoE architectures with Mac Studio clusters—no expensive H100 servers needed. Students run quantized 7B-13B models locally with MLX-LM tools for hands-on learning and rapid experimentation.
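
For a sense of what local experimentation with MLX-LM can look like, here is a minimal sketch. It assumes the `mlx-lm` Python package is installed on an Apple silicon Mac and uses its `load` and `generate` functions. The prompt format, the helper names, and the model identifier in the usage comment are illustrative placeholders, not recommendations from this page.

```python
def build_prompt(question: str) -> str:
    """Wrap a question in a plain instruction-style prompt (illustrative format)."""
    return f"Answer concisely.\n\nQuestion: {question.strip()}\nAnswer:"


def run_local(model_id: str, question: str, max_tokens: int = 128) -> str:
    """Generate text on-device with MLX-LM.

    Requires Apple silicon and `pip install mlx-lm`. The import is done lazily
    so that build_prompt() stays usable on machines without MLX.
    """
    from mlx_lm import load, generate

    model, tokenizer = load(model_id)  # local path or Hugging Face repo of an MLX-format model
    return generate(model, tokenizer, prompt=build_prompt(question), max_tokens=max_tokens)


# Usage (placeholder identifier: substitute any quantized 7B-13B model in MLX format):
# print(run_local("<your-quantized-model>", "What is a mixture-of-experts model?"))
```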

Why Choose Mac Studio + Accusys

The Best Solution for Enterprise On-Premises AI Computing

Breaking the Limits of Traditional GPU Architecture

Mac Studio combined with Accusys storage delivers a high-performance and cost-efficient on-premises AI deployment solution. Through unified memory architecture and open-source model support, it enables a flexible, secure, and future-ready AI infrastructure for enterprises.

Unified Memory Architecture

Supports up to 512 GB of unified memory. The CPU, GPU, and Neural Engine share the same pool, so large models exceeding 130 billion parameters can be loaded in full without partitioning.
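
To make the memory claim concrete, the sketch below estimates how much space a model's weights need at a given precision and checks that against a 512 GB pool. It counts weights only. The 25% headroom reserved for the OS, KV cache, and activations is an arbitrary assumption, and the function names are illustrative.

```python
UNIFIED_MEMORY_GB = 512  # maximum configuration cited above


def weights_gb(params_billion: float, bits_per_param: int) -> float:
    """Approximate weight size in GB (1 GB = 1e9 bytes); excludes KV cache and activations."""
    return params_billion * bits_per_param / 8


def fits(params_billion: float, bits_per_param: int, headroom: float = 0.25) -> bool:
    """True if the weights fit after reserving `headroom` of memory (assumed value)."""
    budget_gb = UNIFIED_MEMORY_GB * (1 - headroom)
    return weights_gb(params_billion, bits_per_param) <= budget_gb


print(weights_gb(130, 16))  # 260.0
print(fits(130, 16))        # True
print(fits(405, 16))        # False
print(fits(405, 4))         # True
```

By this estimate, a 130B-parameter model at 16-bit precision takes about 260 GB for weights, while much larger models would need quantization to fit on a single unit.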

Outstanding Price-Performance Ratio

According to experiments by a National Taiwan University research team, a Mac Studio cluster costs about 1/22 as much as a Databricks GPU server cluster for comparable MoE workloads, which works out to roughly 22 times higher throughput per dollar.
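
The relationship between cost and throughput per dollar can be sketched as follows. The values are normalized placeholders chosen only to show the arithmetic (equal throughput, 22:1 cost). They are not measurements from the cited research, and the `System` class is a hypothetical helper.

```python
from dataclasses import dataclass


@dataclass
class System:
    name: str
    throughput: float  # e.g. tokens per second on the same workload
    cost: float        # total system cost, same currency for both systems


def efficiency_ratio(candidate: System, baseline: System) -> float:
    """How many times more throughput per unit of cost the candidate delivers."""
    return (candidate.throughput * baseline.cost) / (baseline.throughput * candidate.cost)


# Normalized placeholder values, not benchmark results.
baseline = System("GPU server cluster", throughput=1.0, cost=22.0)
candidate = System("Mac Studio cluster", throughput=1.0, cost=1.0)

print(efficiency_ratio(candidate, baseline))  # 22.0
```

The same function can be fed measured tokens per second and actual purchase prices to check the ratio for a specific workload.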

Low-Power Edge Computing

Each unit consumes only 150–200 W and weighs about 3.6 kg, ideal for deployment in offices, labs, or on-site production for real-time edge inference.

Open-Source Model Ecosystem

Compatible with leading open-source models such as Falcon, DeepSeek R1, and Qwen MoE. Research shows 89% of enterprises adopt open-source AI, achieving an average ROI 25% higher than proprietary solutions.

Data Sovereignty and Compliance

Fully on-premises deployment keeps sensitive data internal, meeting strict requirements for finance, healthcare, and government sectors. Pair it with Apple Business Manager (ABM) and mobile device management (MDM) for enterprise-grade centralized management.

Scalable Expansion

Start with a single unit and scale up to a 2–8 node Mac Studio mini-cluster. Integrate with Accusys storage nodes, or build hybrid deployments with cloud GPUs.
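
For planning purposes, the per-unit figures quoted on this page (512 GB memory, 150–200 W) can be scaled to a cluster as in the sketch below. It is simple multiplication under the assumption that every node has the maximum memory configuration. Note that memory is per node rather than one shared pool, so a model spanning nodes still has to be partitioned across them. The function name is illustrative.

```python
MEMORY_GB_PER_NODE = 512    # maximum configuration cited on this page
POWER_W_RANGE = (150, 200)  # per-unit power draw cited on this page


def cluster_totals(nodes: int) -> dict:
    """Aggregate memory, power range, and worst-case daily energy for 1 to 8 nodes."""
    if not 1 <= nodes <= 8:
        raise ValueError("this page describes clusters of up to 8 nodes")
    low_w, high_w = POWER_W_RANGE
    return {
        "memory_gb": nodes * MEMORY_GB_PER_NODE,
        "power_w": (nodes * low_w, nodes * high_w),
        "daily_kwh_max": nodes * high_w * 24 / 1000,  # running 24 hours at the upper bound
    }


print(cluster_totals(8))
# {'memory_gb': 4096, 'power_w': (1200, 1600), 'daily_kwh_max': 38.4}
```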